One common question statisticians face is, “How big of a sample do I need?” Answering this question isn’t straightforward and depends on various factors and assumptions.

The first thing a statistician will ask is, “What do you mean by ‘how large a sample’? Large for what purpose?” For instance, detecting a very small difference between two means, like 0.01, requires a much larger sample than detecting a larger difference, say 1.0. The goal, especially when testing for a difference between two means, is to choose a sample size large enough that the confidence interval is narrow enough to detect the required difference. Essentially, the aim is to select a sample size (denoted n) large enough that the test statistic will exceed the critical value when a difference of the required size really exists.

Let’s start by examining the simplest scenario to grasp the fundamental concepts before moving on to more complex situations. This scenario involves the one-sample t-test, where the numerator of the test statistic is $(\bar{x} - \mu_0)$.

Deciding on the sample size for any experiment involves a blend of statistical analysis and practical judgment. Often, the sample size is determined by factors like the number of available subjects, budget constraints, or time limitations. Even when practical constraints dictate the sample size, conducting sample size calculations can still be valuable. These calculations help assess whether the experiment or survey is worthwhile by indicating the size of differences one can likely detect with the proposed sample size. This information allows researchers to evaluate whether the expected outcomes meet the goals of the study.

In a well-designed study, the sample size required to detect meaningful differences is determined beforehand. For instance, a study of 71 “negative” clinical trials in the medical literature found that 50 of them had sample sizes too small to reliably detect a 50% improvement. Another study of 67 published clinical trials found that only 12% of them adequately addressed sample size.

Opting for an excessively large sample size can lead to increased workload and potential wastage of resources. Conversely, choosing a sample size that’s too small might result in missing important differences or failing to detect any differences altogether. In cases where the sample size is too small, the experiment may yield no useful information, rendering the effort, resources, and participants or materials involved completely wasted.

For surveys, an insufficient sample size can produce apparent differences that look important but are not statistically significant. In studies involving animals, using either too few or too many animals can be considered unethical.

Here are some real-life examples where errors occurred due to inadequate consideration of sample size:

Thalidomide Tragedy: In the late 1950s and early 1960s, thalidomide was widely prescribed to pregnant women to alleviate morning sickness. However, it was later discovered that thalidomide caused severe birth defects in thousands of babies. The tragedy occurred because the initial safety testing of thalidomide did not involve a sufficiently large sample size to detect rare but serious side effects. As a result, the harmful effects of the drug were not adequately identified before it was widely distributed.

False Positive Results in Medical Screening Tests: In medical screening tests, such as mammograms or prostate-specific antigen (PSA) tests, inappropriate sample sizes can lead to false positive results. For instance, if a small sample size is used in a study evaluating the effectiveness of a new screening test for breast cancer, the test may appear to be highly accurate in detecting cancer. However, when the test is applied to a larger population, its accuracy may decrease, leading to unnecessary anxiety and medical procedures for patients who receive false positive results.

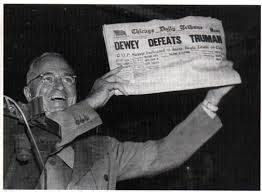

Public Opinion Polls: In political polling, sample size is crucial for accurately predicting election outcomes. If a pollster uses a small sample, the results may be too imprecise to predict the outcome reliably. However, a large sample is not enough on its own: in the 1936 U.S. presidential election, the Literary Digest conducted a poll with a very large sample but failed to account for selection bias, and incorrectly predicted the outcome of the election.

Product Testing: In 1985, Coca-Cola introduced “New Coke,” a reformulation of its classic Coca-Cola formula. The decision to reformulate the iconic soft drink was based on extensive taste tests involving a relatively small sample size of consumers. These taste tests overwhelmingly favored the new formula over the original one.

However, when New Coke was launched nationwide, it faced immediate backlash from consumers. Despite the positive feedback from the taste tests, consumers expressed strong dissatisfaction with the new flavor, leading to widespread protests and boycotts.

The failure of New Coke was attributed in part to the small sample size used in the taste tests, which did not accurately represent the preferences of Coca-Cola’s broader consumer base. While the taste tests indicated that the new formula was preferred by those sampled, it failed to account for the emotional attachment consumers had to the original Coca-Cola formula.

These examples illustrate how inadequate attention to sample size can have practical consequences in fields ranging from medicine to political polling and product testing. By carefully considering sample size requirements, researchers and practitioners can minimize errors and produce more reliable results.

Sample size for a single sample

First, let’s consider testing a single sample. We want to check whether the population mean μ equals a specific value μ₀. We assume that the population standard deviation σ is known. If the sample is large enough (or the population is normal), we can use the normal distribution, and the confidence interval for μ is $\bar{x} \pm z_{1-\alpha/2}\,\sigma/\sqrt{n}$. Here, $z_{1-\alpha/2}$ is the value from the standard normal distribution determined by the significance level α. This interval extends above and below the sample mean $\bar{x}$ by a distance D, where $D = z_{1-\alpha/2}\,\sigma/\sqrt{n}$. This means that, when testing at significance level α, we cannot detect a difference between $\bar{x}$ and μ₀ that is smaller than D.

Now consider a scenario where we take a sample from a population with mean μ₁ and test whether it equals μ₀. We can expect to reject the null hypothesis only if the difference between μ₀ and μ₁ is larger than the half-width of the confidence interval, D. So D represents the smallest difference we can detect at significance level α, given σ and the sample size n.

As we increase the sample size (n), the confidence interval becomes narrower because the square root of n in the denominator increases, meaning we’re dividing by a larger number. Consequently, with larger sample sizes, we can detect smaller differences. This highlights the importance of specifying the size of the difference we aim to detect when determining the required sample size.
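To see the square-root relationship numerically, here is a minimal Python sketch of $D = z_{1-\alpha/2}\,\sigma/\sqrt{n}$. It assumes an illustrative σ = 120 and a two-sided 5% test, and uses the standard library's `statistics.NormalDist` to obtain $z_{1-\alpha/2}$; the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def detectable_difference(n: int, sigma: float, alpha: float = 0.05) -> float:
    """Half-width D = z_{1-alpha/2} * sigma / sqrt(n) of a two-sided z interval."""
    z = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return z * sigma / sqrt(n)


# Illustrative assumption: sigma = 120.
for n in (10, 40, 160):
    print(n, round(detectable_difference(n, sigma=120), 1))
```

Each fourfold increase in n halves D, because D depends on $1/\sqrt{n}$ rather than on $1/n$.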

When we talk about determining if two groups are different, we’re not aiming to prove that their estimated means are exactly the same (which is practically impossible due to minute variations). Instead, we’re using the samples to understand what’s happening in the underlying populations. Our focus is on detecting a “real” difference that holds practical significance. Typically, we have a range of differences in mind that would be meaningful or beneficial.

Consider the case of testing a new fertilizer’s impact on tomato plant yield compared to a standard fertilizer. We’re interested in whether it leads to a higher yield, specifically by at least 2 kilograms per plant per season, as anything less wouldn’t justify the cost. However, we’re not concerned with detecting an unrealistically large increase, such as 50 kilograms.

Our goal is to determine a sample size large enough to detect a 2-kilogram increase. If this requires, for instance, 50 plants, the experiment is feasible. But if it demands an impractical sample size, like 5 million plants, the experiment may not be feasible. We also need to consider practical limitations, like available land and plant spacing.

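The formula linking sample size to the size of the effect (in a single-sample scenario) is obtained by solving $D = z_{1-\alpha/2}\,\sigma/\sqrt{n}$ for n:

$$n = \left(\frac{z_{1-\alpha/2}\,\sigma}{D}\right)^2$$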

Let’s break down each element of the formula:

Sample size (n): This is usually what we’re trying to figure out. Sometimes, practical limitations determine the sample size, and we’re interested in knowing the smallest effect (D) we can detect with the given sample size.

Size of effect (D): This is the smallest effect that’s worth detecting, meaning any larger effect is also valuable. It heavily influences the sample size needed. It’s essential to note that statistical significance doesn’t always align with practical importance. Statistical significance focuses on the likelihood that random chance alone could produce a difference of this size, while practical importance concerns the usefulness of that difference. With a large enough sample, we can detect very small differences, but these might not be practically meaningful. For example, detecting a slight increase in exam scores might not be worth the effort of extra lessons.

Significance level (α): This indicates the risk you’re willing to take of incorrectly concluding there’s a difference when there isn’t one (type I error).

Standard deviation (σ) of the population: This is the standard deviation of the population assumed under the null hypothesis, often representing the standard treatment. It can be estimated from previous data, scientific literature, or personal knowledge. Estimating this parameter can be challenging, especially without prior studies in the area. One might want to use the estimated standard deviation from the sample, but it’s a catch-22 situation since the sample can’t be drawn until the sample size is specified. Exploring the effect of varying the assumed standard deviation can be helpful, looking at its implications on sample size (n) or the size of effect (D).
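A simple way to explore the effect of the assumed standard deviation is to recompute n over a range of plausible values. The sketch below assumes a fixed D = 50, a two-sided 5% test and the normal approximation $n = (z_{1-\alpha/2}\,\sigma/D)^2$; the three σ values are hypothetical guesses, not estimates from data.

```python
from math import ceil
from statistics import NormalDist


def n_single_sample(d: float, sigma: float, alpha: float = 0.05) -> int:
    """Smallest n with z_{1-alpha/2} * sigma / sqrt(n) <= d (normal approximation)."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return ceil((z * sigma / d) ** 2)


# Hypothetical range of guesses for sigma, with D fixed at 50.
for sigma in (90, 120, 150):
    print(sigma, n_single_sample(50, sigma))
```

This gives 13, 23 and 35 respectively: n grows with the square of σ, so a 25% overestimate of σ inflates n by roughly 56%.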

One solution to this issue is to estimate the standard deviation from a pilot survey. However, conducting a pilot survey requires permission and funding, and sample size calculations are necessary for it as well. Additionally, small surveys may not provide accurate estimates of the standard deviation, and these estimates might be less useful than educated guesses. Therefore, this approach is often impractical.

Another approach is to specify the ratio D/σ instead of both D and σ, indicating that we aim to detect an effect of D/σ standard deviations. However, this approach may not always be helpful in many situations.
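Under the normal approximation, n depends on D and σ only through their ratio, since $n = (z_{1-\alpha/2}/(D/\sigma))^2$. A minimal sketch, assuming a two-sided 5% test; the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def n_from_effect_size(effect: float, alpha: float = 0.05) -> int:
    """Sample size for a two-sided z test when the effect is given as D / sigma."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return ceil((z / effect) ** 2)


# D = 50 with sigma = 120 and D = 5 with sigma = 12 are the same effect size (5/12).
print(n_from_effect_size(50 / 120), n_from_effect_size(5 / 12))
```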

There’s one scenario where knowledge of the standard deviation isn’t needed, and that’s when dealing with proportions, but this will be dealt with in the course on Proportions.

It might be surprising, but the size of the population (N) does not enter these sample size calculations. This is because populations are usually large compared with samples, so the sampling fraction n/N is tiny and changing it has almost no effect on the precision of the estimate. Population size only becomes important when the sample is a substantial fraction of the population, for example when you need to sample 10% or 20% of it.
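One way to check this claim is with the finite population correction, a standard adjustment that is not part of this text's formulas and is used here as an assumption: an infinite-population size $n_0$ becomes $n = n_0 / (1 + (n_0 - 1)/N)$. The sketch uses $n_0 = 22.13$, the unrounded value that appears in Example 3.1 below; the population sizes are hypothetical.

```python
from math import ceil


def fpc_adjusted_n(n0: float, population: int) -> int:
    """Adjust an infinite-population sample size n0 using the finite population correction."""
    return ceil(n0 / (1 + (n0 - 1) / population))


n0 = 22.13  # unrounded single-sample size (sigma = 120, D = 50, alpha = 0.05)
for big_n in (100, 1_000, 100_000):
    print(big_n, fpc_adjusted_n(n0, big_n))
```

The adjusted sizes are 19, 22 and 23: once N is in the thousands the correction barely changes n, and it only matters when the sample is a sizeable share of the population.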

Situations where population size matters more include auditing, sampling rare species, or estimating the proportion of time a rare event occurs. For instance, when testing for botulism in a batch of tins where it is rare (maybe 1 in 1000), you might need to sample a large portion of the batch (like 80%) to have a good chance of detecting a contaminated tin, which may not be feasible.
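Because tins are sampled without replacement, the chance of finding at least one contaminated tin follows the hypergeometric distribution: $1 - \binom{N-K}{n}/\binom{N}{n}$ for K contaminated tins in a batch of N. The sketch assumes a hypothetical batch of 1000 tins containing exactly one contaminated tin.

```python
from math import comb


def detection_probability(batch: int, contaminated: int, sampled: int) -> float:
    """P(at least one contaminated tin) in a simple random sample without replacement."""
    return 1 - comb(batch - contaminated, sampled) / comb(batch, sampled)


# Hypothetical batch: 1000 tins, exactly 1 contaminated (a rate of 1 in 1000).
for sampled in (100, 500, 800):
    print(sampled, round(detection_probability(1000, 1, sampled), 2))
```

With a single contaminated tin, the detection probability equals the sampled fraction, so an 80% chance of finding it requires opening 80% of the batch.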

For auditing or when dealing with rare populations, more advanced statistical methods are needed. These scenarios aren’t covered in this text, but resources like Cochran and Fleiss et al. provide further insights.

Example 3.1 (continuing from Example 2.2)

In the previous example about piglets fed a specific diet, suppose we want to detect a difference of 50 grams in weight gain compared with a standard diet giving a gain of 200 grams. This means we want the confidence interval to be narrow enough to detect a 50-gram difference in either direction; in other words, we want the half-width D to be at most 50 grams.

To find the number of piglets (n) needed for the experiment, we set up the equation $50 = z_{1-\alpha/2}\,\sigma/\sqrt{n}$. We still need to choose values for σ and α. As before, we assume the population standard deviation σ is 120 grams. If we want to test at the 5% level (so α/2 = 0.025), then $z_{1-\alpha/2} = 1.96$. So we want the sample size n such that:

$$n = \left(\frac{1.96 \times 120}{50}\right)^2 = 4.704^2 = 22.13$$

Thus, we need a sample size of 23 to detect a 50-gram difference at the 5% significance level, assuming a population standard deviation of 120 grams. Since we cannot use a fraction of a piglet, we round 22.13 up to 23, which keeps D at or below 50 grams.
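The arithmetic of Example 3.1 can be reproduced in a few lines of Python. This is a minimal sketch using the example's values (σ = 120, D = 50, α = 0.05); the only difference from the hand calculation is that `NormalDist().inv_cdf` returns the unrounded $z_{0.975} \approx 1.95996$.

```python
from math import ceil
from statistics import NormalDist

sigma, d, alpha = 120, 50, 0.05          # values from Example 3.1
z = NormalDist().inv_cdf(1 - alpha / 2)  # z_{1-alpha/2}, about 1.96
n_exact = (z * sigma / d) ** 2           # about 22.13
n = ceil(n_exact)                        # round up to a whole number of piglets
print(round(z, 2), round(n_exact, 2), n)
```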

When the experiment is analysed, the assumed population standard deviation is rarely used. Instead, the sample standard deviation is used together with the t-test:

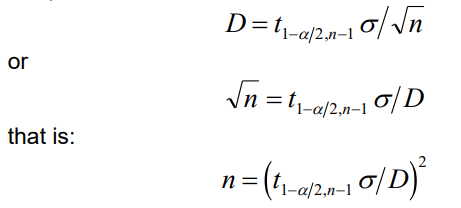

The null hypothesis is rejected if the test statistic exceeds (in absolute value) the critical value $t_{1-\alpha/2,\,n-1}$. Therefore, the $z_{1-\alpha/2}$ in the previous calculations should be replaced by $t_{1-\alpha/2,\,n-1}$, leading to the equation:

$$n = \left(\frac{t_{1-\alpha/2,\,n-1}\,\sigma}{D}\right)^2$$

This introduces a complication because the critical value for the t distribution varies with sample size. Therefore, we need to obtain the solution through iteration, trying several values, rather than a straightforward calculation as before. Here’s how we proceed:

- Let n1 be the sample size obtained using the critical value from the normal distribution, and n2 the correct (t-based) sample size.

- The critical value from a t distribution is always larger than the corresponding normal critical value, and the two become equal as the sample size grows.

- Therefore, the sample size obtained using the t distribution is at least as large as the one obtained from the normal distribution: n1 ≤ n2.

- Using n1, we look up the critical value $t_{1-\alpha/2,\,n_1-1}$. Because n1 ≤ n2, this is at least as large as the critical value for the correct sample size: $t_{1-\alpha/2,\,n_1-1} \ge t_{1-\alpha/2,\,n_2-1}$.

- Therefore, substituting $t_{1-\alpha/2,\,n_1-1}$ into the equation gives a sample size estimate n* that is too large (or just right), so the true sample size n2 lies between n1 and n*.

- We start with n1, compute n*, and then try values between n1 and n* to find n2. The final estimate is the smallest value for which both sides of the equation agree after rounding up.

Continuing from Example 3.1:

Starting with the normal-based sample size of 23, we look up $t_{0.975,22} = 2.074$. Using this value,

$$\sqrt{n} = \frac{2.074 \times 120}{50} = 4.9776, \qquad n = 24.78,$$

which rounds up to an estimated n of 25.

With n = 25, the critical value is $t_{0.975,24} = 2.064$, giving

$$\sqrt{n} = \frac{2.064 \times 120}{50} = 4.9536, \qquad n = 24.54,$$

which also rounds up to 25. Since this agrees with the previous estimate, 25 is the final answer.
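The iterative search can be sketched in Python as follows. Since the standard library has no t distribution, this sketch computes the t quantile numerically (Simpson's rule on the t density plus bisection); the helper names `t_cdf` and `t_ppf` are hypothetical, and in practice one would call a statistics library such as SciPy's `scipy.stats.t.ppf`. Rather than bracketing between n1 and n*, it simply steps upward from n1, which reaches the same answer because the right-hand side shrinks as n grows.

```python
import math
from statistics import NormalDist


def t_cdf(x: float, df: int, steps: int = 1000) -> float:
    """Student's t CDF: 0.5 plus the density integrated from 0 to x by Simpson's rule."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def pdf(u: float) -> float:
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps  # steps must be even for Simpson's rule
    area = pdf(0.0) + pdf(abs(x))
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * pdf(i * h)
    return 0.5 + math.copysign(area * h / 3, x)


def t_ppf(p: float, df: int) -> float:
    """Upper quantile (p > 0.5) of Student's t, found by bisection on t_cdf."""
    lo, hi = 0.0, 50.0
    for _ in range(50):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def n_single_sample_t(d: float, sigma: float, alpha: float = 0.05) -> tuple[int, int]:
    """Return (normal-based n1, smallest n with n >= (t_{1-alpha/2,n-1} * sigma / d)^2)."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    n1 = math.ceil((z * sigma / d) ** 2)
    n = n1
    # No n below n1 can work, and the right-hand side decreases as n increases.
    while n < (t_ppf(1 - alpha / 2, n - 1) * sigma / d) ** 2:
        n += 1
    return n1, n


print(n_single_sample_t(50, 120))  # Example 3.1: sigma = 120, D = 50
```

With the values of Example 3.1 it returns the normal-based starting point 23 and the t-based answer 25.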

These calculations are also useful when the sample size is fixed in advance.

Consider a scenario where only 5 subjects can be obtained for an experiment. If you want to limit the risk of wrongly rejecting the null hypothesis to 5% and are interested in detecting differences in either direction, the minimum detectable difference is:

$$D = \frac{t_{0.975,4}\,\sigma}{\sqrt{n}}$$

This simplifies to:

$$D = \frac{2.776\,\sigma}{\sqrt{5}}$$

or

$$D = 1.242\,\sigma$$

This means you’ll be able to detect a difference of 1.242 standard deviations. For instance, if the population standard deviation is assumed to be 2 units, you can detect a difference of 2.48 units. However, if the population standard deviation is 4 units, you can only detect a difference of 4.97 units. Based on this, you can evaluate whether detecting differences of this magnitude aligns with the goals of your experiment.
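The same calculation for a fixed n can be sketched in Python. As in the earlier sketch, the t quantile is computed numerically because the standard library lacks a t distribution; `t_cdf`, `t_ppf` and `min_detectable_difference` are hypothetical helpers, and σ values of 1, 2 and 4 units are the illustrative assumptions from the text.

```python
import math


def t_cdf(x: float, df: int, steps: int = 1000) -> float:
    """Student's t CDF via Simpson's rule on the density from 0 to x."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def pdf(u: float) -> float:
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    area = pdf(0.0) + pdf(abs(x))
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * pdf(i * h)
    return 0.5 + math.copysign(area * h / 3, x)


def t_ppf(p: float, df: int) -> float:
    """Upper quantile (p > 0.5) of Student's t by bisection."""
    lo, hi = 0.0, 50.0
    for _ in range(50):
        mid = (lo + hi) / 2
        lo, hi = (mid, hi) if t_cdf(mid, df) < p else (lo, mid)
    return (lo + hi) / 2


def min_detectable_difference(n: int, sigma: float, alpha: float = 0.05) -> float:
    """Smallest two-sided difference D = t_{1-alpha/2,n-1} * sigma / sqrt(n)."""
    return t_ppf(1 - alpha / 2, n - 1) * sigma / math.sqrt(n)


for sigma in (1, 2, 4):
    print(sigma, round(min_detectable_difference(5, sigma), 2))
```

For n = 5 this reproduces the 1.24σ, 2.48 and 4.97 figures above.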

Power of a test

In addition to considering the probability of Type I error (denoted by α), it’s important to include the power of the test in sample size calculations. The power of the test, denoted by 1-β, represents the probability of correctly detecting a difference if it truly exists.

Up to this point, we’ve focused on the likelihood of wrongly rejecting the null hypothesis when it’s true, known as Type I error. This occurs when we observe a significant test statistic purely by chance, even though there’s no real difference in population means. However, there’s another kind of error we need to consider: Type II error. This occurs when we fail to reject the null hypothesis when it’s actually false, meaning there is a genuine difference between population means. Type II error is represented by β.

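The possible decisions and their implications may be shown as:

| Decision | H₀ true (no real difference) | H₀ false (real difference) |
|---|---|---|
| Reject H₀ | Type I error (probability α) | Correct decision (probability 1 − β, the power) |
| Do not reject H₀ | Correct decision (probability 1 − α) | Type II error (probability β) |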

In Figure 3.1, we can observe the implications of Type I and Type II errors in hypothesis testing. Figure 3.1(a) displays a N(10, (0.5)²) distribution, that is, a normal distribution with mean 10 and standard deviation 0.5. When testing against the two-sided alternative (“≠ 10”), the critical values are the mean ± 1.96 standard deviations, namely 9.02 and 10.98. If the null hypothesis is true, the test statistic falls outside this range about 5% of the time, and each such rejection is a Type I error.

Now consider Figure 3.1(b), where the true distribution is N(11, (0.5)²). Using the upper critical value of 10.98 from the N(10, (0.5)²) distribution, we reject the null hypothesis only if our test statistic exceeds 10.98. However, about half of the values from the N(11, (0.5)²) distribution fall below 10.98, so about half the time we commit a Type II error, failing to reject the null hypothesis despite a true difference in means.

Figures 3.1(c) and 3.1(d) show normal distributions with means of 10 and 11, respectively, and a smaller standard deviation of 0.25. The upper critical value is now 10 + 1.96 × 0.25 = 10.49, and only about 2% of the N(11, (0.25)²) distribution falls below it, so the chance of missing the true difference drops sharply. This highlights the impact of the standard deviation on hypothesis testing.
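The Type II error probabilities for the panels of Figure 3.1 can be computed directly. A minimal sketch, assuming the test statistic is normally distributed with the stated standard deviation and that β is the probability that it lands inside the two-sided acceptance region of the null distribution; `type_ii_error` is a hypothetical name.

```python
from statistics import NormalDist


def type_ii_error(mu0: float, mu1: float, sd: float, alpha: float = 0.05) -> float:
    """P(fail to reject H0: mu = mu0) when the statistic is really N(mu1, sd^2)."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    lower, upper = mu0 - z * sd, mu0 + z * sd  # acceptance region under H0
    truth = NormalDist(mu1, sd)
    return truth.cdf(upper) - truth.cdf(lower)


for sd in (0.5, 0.25):  # panels (a)/(b) and (c)/(d) of Figure 3.1
    beta = type_ii_error(10, 11, sd)
    print(sd, round(beta, 3), round(1 - beta, 3))  # beta and power
```

β is about 0.48 when the standard deviation is 0.5 and about 0.02 when it is 0.25, matching the "about half" and "about 2%" figures above.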

When taking the power of the test (1 − β) into account, we want a small α (probability of a Type I error) and a large 1 − β (probability of correctly detecting a difference). To include power in sample size calculations, the critical value $z_{1-\alpha/2}$ (or $t_{1-\alpha/2}$) is replaced by the sum $z_{1-\alpha/2} + z_{1-\beta}$ (or $t_{1-\alpha/2} + t_{1-\beta}$), which incorporates both α and 1 − β.

Continuing from Example 3.1:

Using the normal distribution cut-offs and a standard deviation of 120, we replace $z_{1-\alpha/2}$ by $z_{1-\alpha/2} + z_{1-\beta}$. Setting the power 1 − β to 90% gives β = 0.1 and $z_{1-\beta} = z_{0.9} = 1.28$. Thus, we replace the previously used 1.96 by 1.96 + 1.28 = 3.24. Solving for n gives:

$$\sqrt{n} = \frac{3.24 \times 120}{50} = 7.776,$$

which yields n = 60.466, rounded up to 61. Therefore, we need a sample size of 61 instead of 23 to keep the risk of a Type I error at 5% while having a 90% chance of detecting a 50-gram difference if it exists.
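A minimal Python sketch of the power-adjusted calculation, using the normal approximation and the values of Example 3.1 (σ = 120, D = 50, two-sided α = 0.05); `n_with_power` is a hypothetical name.

```python
from math import ceil
from statistics import NormalDist


def n_with_power(d: float, sigma: float, alpha: float = 0.05, power: float = 0.90) -> int:
    """Single-sample n = ((z_{1-alpha/2} + z_{1-beta}) * sigma / d)^2, rounded up."""
    std = NormalDist()
    z_alpha = std.inv_cdf(1 - alpha / 2)  # 1.96 for a two-sided 5% test
    z_beta = std.inv_cdf(power)           # about 1.28 for 90% power
    return ceil(((z_alpha + z_beta) * sigma / d) ** 2)


print(n_with_power(50, 120))             # 90% power
print(n_with_power(50, 120, power=0.5))  # z_{1-beta} = 0
```

Setting the power to 50% makes $z_{1-\beta} = 0$ and recovers the original 23, which shows that the calculation without a power term only gives about a 50% chance of detecting a true difference of size D.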

Sample size for the two sample case

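For two samples, with sizes n1 and n2 respectively, the half-width of the confidence interval for the difference in means is:

$$t_{1-\alpha/2,\,n_1+n_2-2}\,\sigma\sqrt{\frac{1}{n_1}+\frac{1}{n_2}}$$

Setting this equal to D (the size of the difference between the treatments we want to detect) and solving yields:

$$\frac{1}{n_1}+\frac{1}{n_2} = \left(\frac{D}{t_{1-\alpha/2,\,n_1+n_2-2}\,\sigma}\right)^2$$

Including the power, the equation becomes:

$$\frac{1}{n_1}+\frac{1}{n_2} = \left(\frac{D}{\left(t_{1-\alpha/2,\,n_1+n_2-2}+t_{1-\beta,\,n_1+n_2-2}\right)\sigma}\right)^2$$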

NB: Including the power does not change the structure of the formula, but it does change the multiplier, and therefore the resulting sample size.

Without the power term, the multiplier is $t_{1-\alpha/2}$ alone. The sample size is then chosen so that the critical value for the observed difference equals D, so if the true difference is exactly D, the observed difference exceeds the critical value only about half the time: the power is roughly 50%.

Including the power replaces the multiplier by $t_{1-\alpha/2} + t_{1-\beta}$. This gives a probability of approximately 1 − β of detecting a true difference of size D, at the cost of a larger sample size.

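One typically sets n1 = n2 = n, both to minimize the total number of observations required and to reduce the errors that arise from using a pooled estimate of the variance when the population variances are unequal. Since 1/n1 + 1/n2 = 2/n, this simplifies the formula to:

$$n = \frac{2\sigma^2\left(t_{1-\alpha/2,\,2n-2}+t_{1-\beta,\,2n-2}\right)^2}{D^2}$$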

The solution involves initially replacing t by z for an approximate solution. The sample size obtained is then used to calculate the t value to obtain an upper bound for the sample size. This process is repeated for different values of n until both sides of the equation agree.
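The procedure can be sketched in Python under stated assumptions: equal group sizes, a common σ, and the per-group formula $n = 2\sigma^2 (t_{1-\alpha/2,\,2n-2} + t_{1-\beta,\,2n-2})^2 / D^2$. The inputs σ = 120 and D = 50 are borrowed from Example 3.1 purely for illustration, and the t quantile is again computed numerically by hypothetical helpers (a library routine such as SciPy's `scipy.stats.t.ppf` would normally be used).

```python
import math
from statistics import NormalDist


def t_cdf(x: float, df: int, steps: int = 1000) -> float:
    """Student's t CDF via Simpson's rule on the density from 0 to x."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def pdf(u: float) -> float:
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    area = pdf(0.0) + pdf(abs(x))
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * pdf(i * h)
    return 0.5 + math.copysign(area * h / 3, x)


def t_ppf(p: float, df: int) -> float:
    """Upper quantile (p > 0.5) of Student's t by bisection."""
    lo, hi = 0.0, 50.0
    for _ in range(50):
        mid = (lo + hi) / 2
        lo, hi = (mid, hi) if t_cdf(mid, df) < p else (lo, mid)
    return (lo + hi) / 2


def n_per_group(d: float, sigma: float, alpha: float = 0.05, power: float = 0.90) -> tuple[int, int]:
    """Return (z-based first approximation, t-based n per group) for two equal groups."""
    std = NormalDist()
    z_sum = std.inv_cdf(1 - alpha / 2) + std.inv_cdf(power)
    n_z = math.ceil(2 * (z_sum * sigma / d) ** 2)  # replace t by z first
    n = n_z
    # Step up until n >= 2 sigma^2 (t_{1-alpha/2,2n-2} + t_{1-beta,2n-2})^2 / d^2.
    while n < 2 * ((t_ppf(1 - alpha / 2, 2 * n - 2) + t_ppf(power, 2 * n - 2)) * sigma / d) ** 2:
        n += 1
    return n_z, n


print(n_per_group(50, 120))
```

With these hypothetical inputs the z approximation gives 122 per group and the t-based search 123 per group; with large samples the t correction adds very little.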

Note that the sample sizes refer to the number of experimental units receiving each treatment, such as litters or groups, which is not necessarily the number of individual subjects. If the required sample size exceeds the number of units available, one can adjust the significance level or the difference considered worth detecting, or use sampling strategies that realistically reduce the variance.

Using a one-tailed test instead of a two-tailed test can also reduce the required sample size, but this choice should be made carefully: it is appropriate only when a difference in the other direction is genuinely of no interest. Several books in the applied literature, such as those by Cohen (1977) and Odeh and Fox, discuss sample size calculations in detail. Additional sample size formulae for different tests will be covered as they arise.
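The saving from a one-tailed test comes from replacing $z_{1-\alpha/2}$ by $z_{1-\alpha}$. A minimal sketch using the single-sample, 90%-power setting of Example 3.1 (σ = 120, D = 50, α = 0.05) and the normal approximation; `n_one_or_two_sided` is a hypothetical name.

```python
from math import ceil
from statistics import NormalDist


def n_one_or_two_sided(d: float, sigma: float, alpha: float = 0.05,
                       power: float = 0.90, two_sided: bool = True) -> int:
    """Single-sample n with power, using z_{1-alpha/2} (two-sided) or z_{1-alpha} (one-sided)."""
    std = NormalDist()
    z_alpha = std.inv_cdf(1 - alpha / 2 if two_sided else 1 - alpha)
    z_beta = std.inv_cdf(power)
    return ceil(((z_alpha + z_beta) * sigma / d) ** 2)


print(n_one_or_two_sided(50, 120), n_one_or_two_sided(50, 120, two_sided=False))
```

Here the two-sided test needs 61 piglets and the one-sided test 50, because $z_{0.95} \approx 1.645$ is smaller than $z_{0.975} \approx 1.96$.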

Sample Size Determination Summary

In this section, we covered the fundamental aspects of sample size determination, a crucial step in the research process. We began by exploring the concepts of Type I and Type II errors, highlighting the significance of minimizing these errors in hypothesis testing. We then delved into the notion of statistical power, emphasizing its importance in detecting true effects and avoiding false conclusions.

Moving forward, we examined the intricate calculations involved in determining sample sizes, considering factors such as significance levels, effect sizes, and standard deviations. We discussed how these calculations evolve when considering two-sample comparisons and the inclusion of statistical power.

Throughout our discussion, we underscored the importance of aligning sample sizes with the objectives of the research, ensuring sufficient statistical power to detect meaningful differences while controlling for the risk of Type I errors. We also touched upon practical considerations, such as the availability of subjects and the need for realistic variance estimates.

In summary, sample size determination is a critical step in research design, balancing the need for precision with practical constraints. By understanding the principles and methods discussed in this section, researchers can make informed decisions and conduct studies that yield robust and reliable results.

More readings

- Cohen, J. (1977). Statistical power analysis for the behavioral sciences (Rev. ed.). Academic Press.

- Odeh, R. E., & Fox, B. J. (1985). Sample size choice: a review. The Statistician, 34(4), 277-287.

- Hulley, S. B., Cummings, S. R., Browner, W. S., Grady, D., & Newman, T. B. (2013). Designing clinical research. Lippincott Williams & Wilkins.

- Altman, D. G., Machin, D., Bryant, T. N., & Gardner, M. J. (Eds.). (2000). Statistics with confidence: Confidence intervals and statistical guidelines (2nd ed.). BMJ Books.

- Sample size determination in health studies: A practical manual. (1991). World Health Organization.